SONIC: Sonar Image Correspondence using Pose Supervised Learning for Imaging Sonars

Samiran Gode*, Akshay Hinduja*, Michael Kaess

ICRA 2024

Abstract

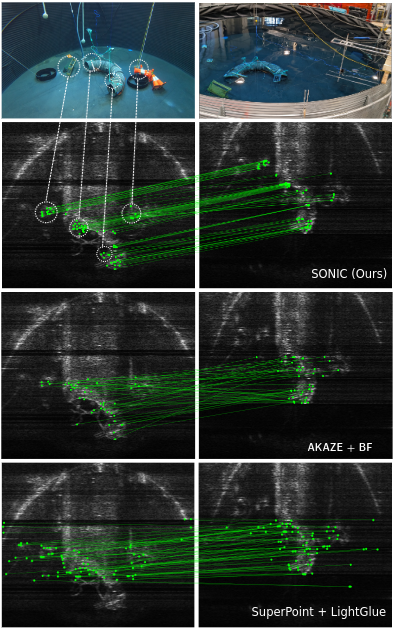

In this paper, we address the challenging problem of data association for underwater SLAM through a novel method for sonar image correspondence using learned features. We introduce SONIC (SONar Image Correspondence), a pose-supervised network designed to yield robust feature correspondences that withstand viewpoint variations. The inherent complexity of the underwater environment stems from dynamic and frequently limited visibility conditions, which restrict vision to a few meters of often featureless expanses. This makes camera-based systems suboptimal in most open-water application scenarios. Consequently, multibeam imaging sonars emerge as the preferred choice of perception sensor. However, they are not without limitations. While imaging sonars offer superior long-range visibility compared to cameras, their measurements can appear different from varying viewpoints. This inherent variability presents formidable challenges in data association, particularly for feature-based methods. Our method demonstrates significantly better performance in generating correspondences for sonar images, which will pave the way for more accurate loop closure constraints and sonar-based place recognition. Code as well as simulated and real-world datasets will be made public to facilitate further development in the field.

Citation

S. Gode*, A. Hinduja*, and M. Kaess, "SONIC: Sonar Image Correspondence using Pose Supervised Learning for Imaging Sonars," in Proc. IEEE Intl. Conf. on Robotics and Automation (ICRA), Yokohama, Japan, May 2024.